Day 5. Principles

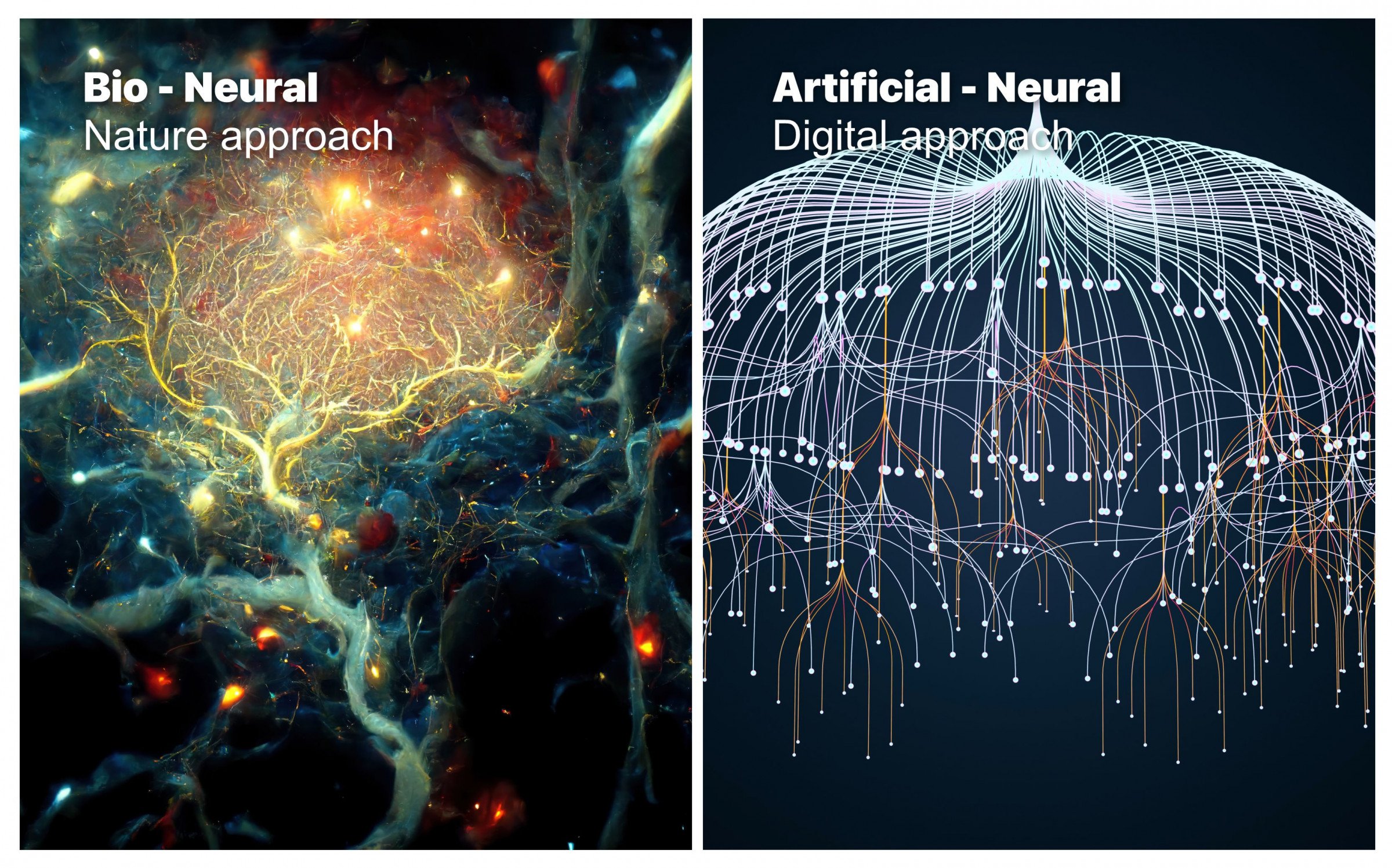

Artificial neural networks (ANNs) are a family of machine learning models loosely inspired by the structure and function of the human brain. The basic building block of an ANN is the artificial neuron, which receives inputs, computes a weighted sum of them, and passes the result through an activation function to produce an output. Neurons are organized into layers: the input layer receives the raw data, the hidden layers transform it, and the output layer produces the final result.
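To make the neuron and layer idea concrete, here is a minimal sketch in plain Python. The weights, biases, and input values are made-up numbers for illustration, and the sigmoid is just one possible choice of activation.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical numbers, chosen only for illustration.
raw_data = [0.5, -1.0, 2.0]                       # input layer: 3 features
hidden = layer(raw_data,
               [[0.1, 0.2, 0.3], [-0.3, 0.1, 0.2]],
               [0.0, 0.1])                        # hidden layer: 2 neurons
output = layer(hidden, [[0.7, -0.5]], [0.2])      # output layer: 1 neuron
print(hidden, output)
```

Each layer only sees the outputs of the layer before it, which is why the hidden layer's result is passed straight in as the output layer's input.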

Get insights

Deep Learning State of the Art – Lex Fridman

Understand Tensorflow 2.0 and Python from zero in 7 hours 🤯

More practice

The spelled-out intro to language modeling: building makemore - Andrej Karpathy

Building makemore Part 2: MLP - Andrej Karpathy

Activation & Gradients

An important principle of ANNs is the use of non-linear activation functions. These functions, such as the sigmoid or the rectified linear unit (ReLU), introduce non-linearity into the network, allowing it to model complex relationships in the data. Without them, any stack of layers would collapse into a single linear transformation, no matter how many layers it had.
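A small sketch of why the non-linearity matters, using one-dimensional "layers" with hypothetical weights. Two linear layers stacked on top of each other are equivalent to one linear layer, while putting a ReLU in between breaks that equivalence.

```python
import math

def sigmoid(z):
    """Squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Passes positive values through and clips negatives to zero."""
    return max(0.0, z)

# Two stacked linear "layers" with no activation in between...
def two_linear_layers(x):
    h = 2.0 * x + 1.0
    return -3.0 * h + 0.5

# ...are the same function as one linear layer: -3 * (2x + 1) + 0.5 = -6x - 2.5
def one_linear_layer(x):
    return -6.0 * x - 2.5

# With a ReLU in between, the result is no longer a straight line.
def with_relu(x):
    h = relu(2.0 * x + 1.0)
    return -3.0 * h + 0.5

for x in [-2.0, 0.0, 1.0]:
    print(x, two_linear_layers(x), one_linear_layer(x), with_relu(x))
```

For negative enough inputs the ReLU version stays flat while the purely linear version keeps changing, and that kink is what lets deeper networks represent shapes a single linear layer cannot.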

Building makemore Part 3: Activations & Gradients, BatchNorm - Andrej Karpathy

Backpropagation

One of the fundamental principles of ANNs is that they learn from data. During training, the network is fed a set of input-output pairs, and a loss function measures the error between the predicted output and the actual output. Backpropagation then applies the chain rule to propagate this error backward through the network, computing how much each weight contributed to it. An optimizer such as gradient descent uses these gradients to adjust the weights and reduce the overall error.
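The loop described above can be sketched by hand for a very small network. This is an illustrative toy, not the approach used in the videos: the network shape (one tanh hidden unit, one linear output), the three data points, the starting weights, and the learning rate of 0.1 are all assumptions picked to keep the example short.

```python
import math

# Tiny network: x -> h = tanh(w1*x + b1) -> y_hat = w2*h + b2
data = [(-1.0, -0.5), (0.0, 0.0), (1.0, 0.5)]   # hypothetical (input, target) pairs
params = {"w1": 0.5, "b1": 0.0, "w2": 0.5, "b2": 0.0}

def forward(p, x):
    h = math.tanh(p["w1"] * x + p["b1"])
    return h, p["w2"] * h + p["b2"]

def mean_squared_error(p):
    return sum((forward(p, x)[1] - y) ** 2 for x, y in data) / len(data)

def gradients(p):
    """Backpropagation by hand: apply the chain rule from the loss back to each weight."""
    g = {k: 0.0 for k in p}
    for x, y in data:
        h, y_hat = forward(p, x)
        d_y_hat = 2.0 * (y_hat - y) / len(data)   # dLoss/dy_hat
        g["w2"] += d_y_hat * h
        g["b2"] += d_y_hat
        d_h = d_y_hat * p["w2"]                   # error flowing back into the hidden unit
        d_z = d_h * (1.0 - h * h)                 # derivative of tanh is 1 - tanh^2
        g["w1"] += d_z * x
        g["b1"] += d_z
    return g

loss_before = mean_squared_error(params)
for _ in range(200):                              # plain gradient descent
    g = gradients(params)
    for k in params:
        params[k] -= 0.1 * g[k]
loss_after = mean_squared_error(params)
print(loss_before, loss_after)
```

Frameworks such as PyTorch or TensorFlow compute the same gradients automatically, but writing them out once makes it clearer what "propagating the error back" actually means.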

Building makemore Part 4: Becoming a Backprop Ninja - Andrej Karpathy (Essential)

Deep dive

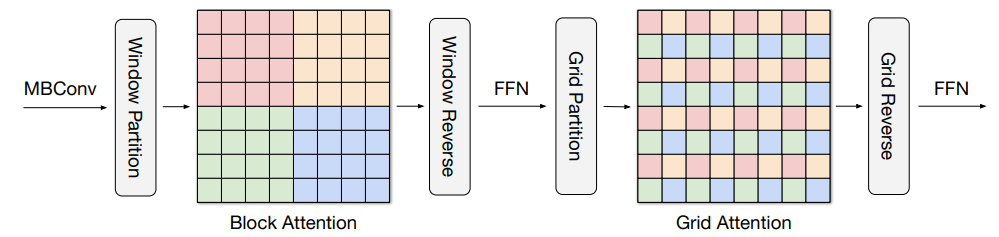

The architecture of an ANN also has a large effect on its performance. The simplest is the feedforward neural network, where information flows in one direction from the input layer to the output layer. The convolutional neural network (CNN) is a feedforward variant that slides small learned filters across its input, which makes it particularly suited to image and video analysis.
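As a rough sketch of the core operation inside a CNN, the snippet below slides a small filter over a tiny image. The image and the hand-written edge filter are hypothetical; in a real CNN the filter values are learned during training, and the library versions also handle channels, padding, and strides.

```python
def conv2d(image, kernel):
    """'Valid' cross-correlation, which most deep learning libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 3x4 image that is dark on the left and bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A hand-written filter that responds to a dark-to-bright vertical edge.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, edge_kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The same few filter weights are reused at every position, so the filter finds the edge wherever it appears in the image. That weight sharing is a large part of why CNNs work well on visual data.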

Overfitting is another important concern. It occurs when the model fits the training dataset too closely, including its noise, and as a result performs poorly on the test dataset. It is a common problem, and it can be mitigated with regularization techniques such as L1 and L2 regularization or dropout.
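Here is a minimal sketch of the three techniques just mentioned. The function names, the example weights, and the strength of 0.01 are illustrative assumptions, and the dropout shown is the common "inverted" variant that rescales the surviving activations.

```python
import random

def l1_penalty(weights, strength):
    """L1: penalize the sum of absolute weights, which pushes some weights to zero."""
    return strength * sum(abs(w) for w in weights)

def l2_penalty(weights, strength):
    """L2: penalize the sum of squared weights, which keeps all weights small."""
    return strength * sum(w * w for w in weights)

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each activation with probability drop_prob, rescale the rest."""
    if not training or drop_prob == 0.0:
        return list(activations)                  # dropout is switched off at test time
    keep = 1.0 - drop_prob
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

weights = [0.5, -1.0, 2.0]                        # hypothetical weights
data_loss = 0.30                                  # hypothetical loss on the training data
total_loss = data_loss + l2_penalty(weights, 0.01)
print(total_loss)

rng = random.Random(0)                            # seeded so the run is repeatable
print(dropout([1.0, 2.0, 3.0, 4.0], 0.5, rng))
```

The penalty is added to the training loss, so the optimizer has to trade off fitting the data against keeping the weights small. Dropout is applied only during training, which is why the function takes a `training` flag.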

Deep learning refers to ANNs with many layers of processing, which learn to extract increasingly high-level features from the data. This has led to significant improvements in tasks such as image and speech recognition. For more information on the principles of ANNs, I would recommend checking out the following readings:

- "A Few Useful Things to Know About Machine Learning" by Pedro Domingos

- "Deep Learning" by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

- "Efficient BackProp" by Yann LeCun, Léon Bottou, Genevieve Orr, and Klaus-Robert Müller

- "ImageNet Classification with Deep Convolutional Neural Networks" by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton

These readings provide a deeper understanding of the fundamental principles behind artificial neural networks and of recent advances in the field.
