Day 2: Introduction to Neural Networks

Understanding what a neural network is and how it works

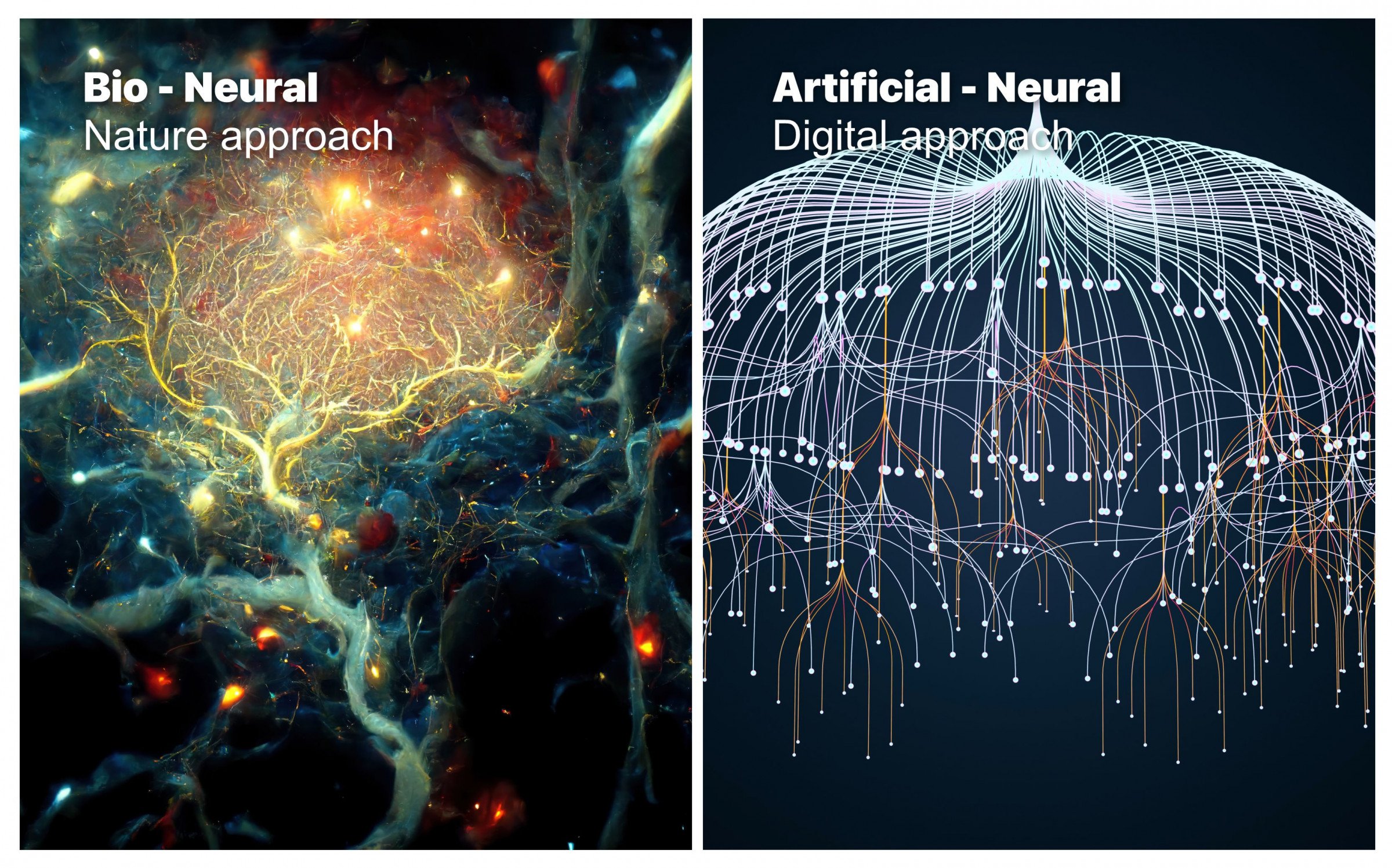

Neural networks, also known as artificial neural networks (ANNs), are a type of machine-learning model inspired by the structure and function of the human brain. They are used to analyze and process large sets of complex data, such as images, speech, and natural language. At a high level, a neural network is composed of layers of interconnected "neurons," which process and transmit information. Each neuron receives input from other neurons, performs a mathematical operation on that input, and then sends the output to other neurons in the next layer.

The most basic type of neural network is the single-layer perceptron, in which a layer of inputs is connected directly to a single layer of output neurons. Each output is computed by a simple mathematical function of a weighted sum of the inputs. More complex neural networks, such as deep neural networks, stack multiple layers of neurons between the input and the output and can learn and represent much more intricate patterns in the data. These networks are called "deep" because of the number of layers they contain.

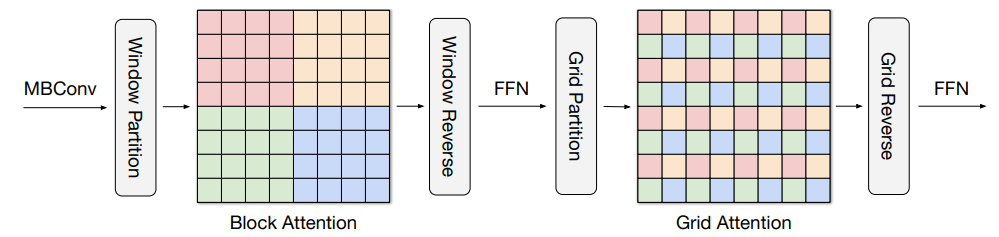

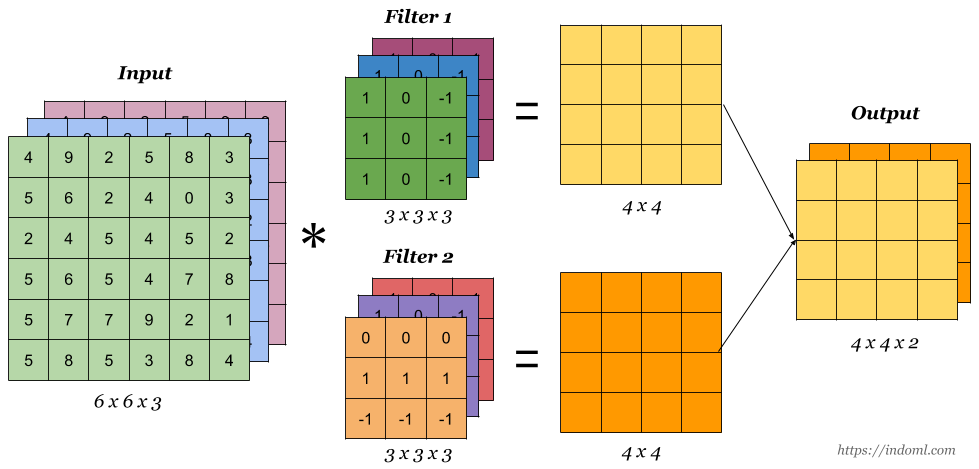

A key aspect of neural networks is their ability to learn from data. During training, the weights of the connections between the neurons are adjusted to minimize the error of the network's predictions. Backpropagation uses the chain rule to compute how much each weight contributed to that error (the gradient of the error with respect to each weight), and gradient descent then uses these gradients to update the weights iteratively.

One of the most popular uses of neural networks is image recognition, such as identifying objects in photographs or videos. Convolutional neural networks (CNNs) are a type of deep neural network particularly well-suited to this task. They use convolutional layers, which slide small filters across the image to extract features, and pooling layers, which downsample the resulting feature maps to reduce the computational cost.
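Going back to how the weights are learned: the sketch below is a minimal NumPy illustration of backpropagation followed by gradient descent for a tiny two-layer network. The setup is an assumption chosen for illustration, not taken from the text: sigmoid activations, a halved mean-squared-error loss, the four XOR examples as data, three hidden units, and a learning rate of 0.5. It first checks the backpropagated gradients against finite differences, then takes 100 gradient-descent steps.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(params, X):
    W1, b1, W2, b2 = params
    h = sigmoid(X @ W1 + b1)   # hidden-layer activations, shape (n, hidden)
    y = sigmoid(h @ W2 + b2)   # network outputs, shape (n, 1)
    return h, y

def loss(params, X, t):
    _, y = forward(params, X)
    return 0.5 * np.mean((y - t) ** 2)   # halved mean squared error

def backprop(params, X, t):
    """Gradients of `loss` with respect to every parameter, via the chain rule."""
    W1, b1, W2, b2 = params
    h, y = forward(params, X)
    n = X.shape[0]
    delta2 = (y - t) / n * y * (1 - y)       # dLoss/d(pre-activation) at the output
    delta1 = (delta2 @ W2.T) * h * (1 - h)   # error propagated back to the hidden layer
    return [X.T @ delta1, delta1.sum(axis=0), h.T @ delta2, delta2.sum(axis=0)]

def numerical_grad(params, X, t, eps=1e-6):
    """Central finite differences, used only to check `backprop`."""
    grads = []
    for p in params:
        g = np.zeros_like(p)
        for idx in np.ndindex(p.shape):
            original = p[idx]
            p[idx] = original + eps
            plus = loss(params, X, t)
            p[idx] = original - eps
            minus = loss(params, X, t)
            p[idx] = original          # restore the parameter
            g[idx] = (plus - minus) / (2 * eps)
        grads.append(g)
    return grads

rng = np.random.default_rng(0)
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
t = np.array([[0.0], [1.0], [1.0], [0.0]])           # XOR targets
params = [rng.normal(size=(2, 3)), np.zeros(3),      # W1, b1
          rng.normal(size=(3, 1)), np.zeros(1)]      # W2, b2

max_diff = max(np.abs(a - b).max()
               for a, b in zip(backprop(params, X, t), numerical_grad(params, X, t)))

loss_before = loss(params, X, t)
for _ in range(100):                                  # plain gradient descent
    grads = backprop(params, X, t)
    params = [p - 0.5 * g for p, g in zip(params, grads)]
loss_after = loss(params, X, t)
```

If the chain-rule code is correct, `max_diff` is very small and `loss_after` is lower than `loss_before`. The finite-difference check is slow and is only meant for verifying hand-written gradients, not for training.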

Another popular use of neural networks is natural language processing (NLP), where the goal is to understand and generate human language. Recurrent neural networks (RNNs) and long short-term memory networks (LSTMs) are well-suited to NLP tasks because they process sequences of data, such as sentences or paragraphs, one element at a time while carrying a hidden state forward, which lets them capture the dependencies and structure of language.

In conclusion, neural networks are powerful machine-learning models inspired by the structure and function of the human brain. They can analyze and process large sets of complex data and are used in a wide range of applications, such as image recognition, natural language processing, and even playing games.

Bio Neuron - The Building Blocks of Neural Networks | What is a neuron?

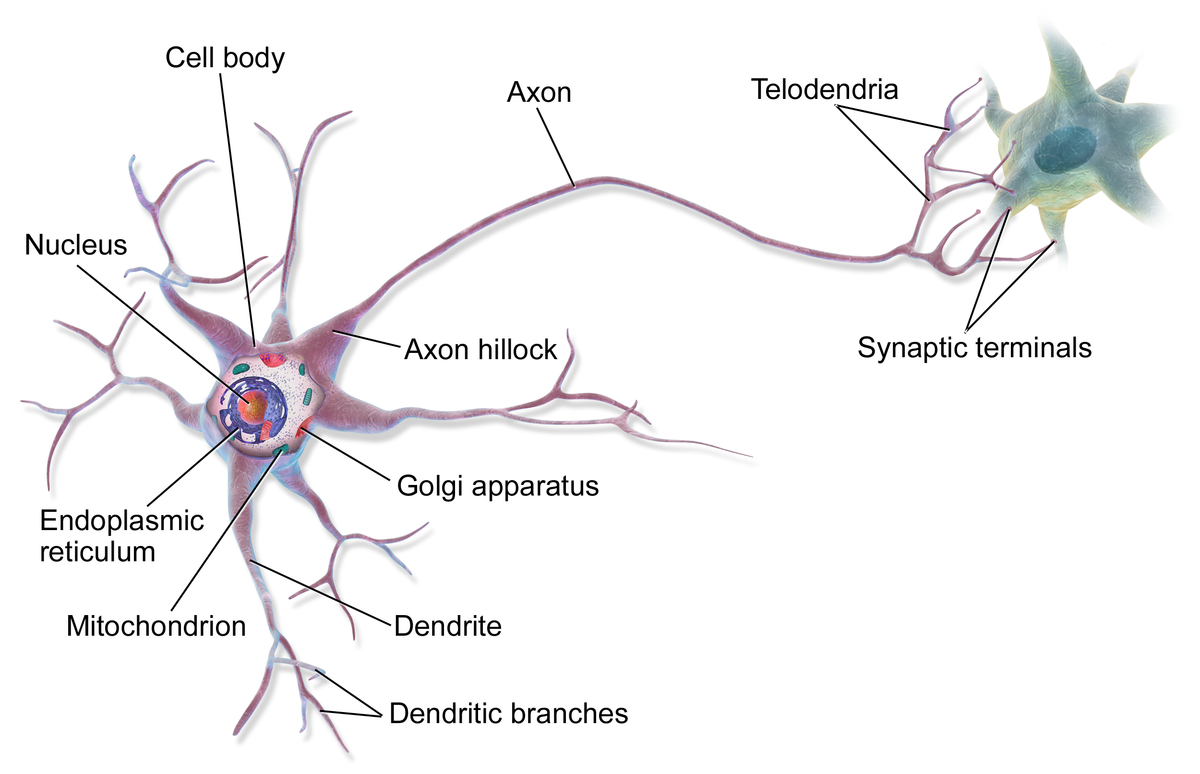

The neuron is the fundamental building block of neural networks. The artificial neuron is modeled after the biological neuron, the basic unit of communication in the nervous system of animals, including humans. Like its biological counterpart, it receives inputs from other neurons, processes that information, and then sends its output on to other neurons.

The structure of a biological neuron is composed of three key parts: the soma (cell body), the dendrites, and the axon. The soma contains the cell's nucleus and other organelles, and it integrates the input arriving from the dendrites. Dendrites are tree-like branches that receive signals from other neurons and transmit them to the soma. The axon is a long, thin fiber that carries electrical signals away from the soma toward other neurons or muscle cells. The signal passes to the next cell at synapses, the junctions between an axon terminal and its target; at most synapses the two cells are separated by a tiny gap, the synaptic cleft, which the signal crosses chemically via neurotransmitters.

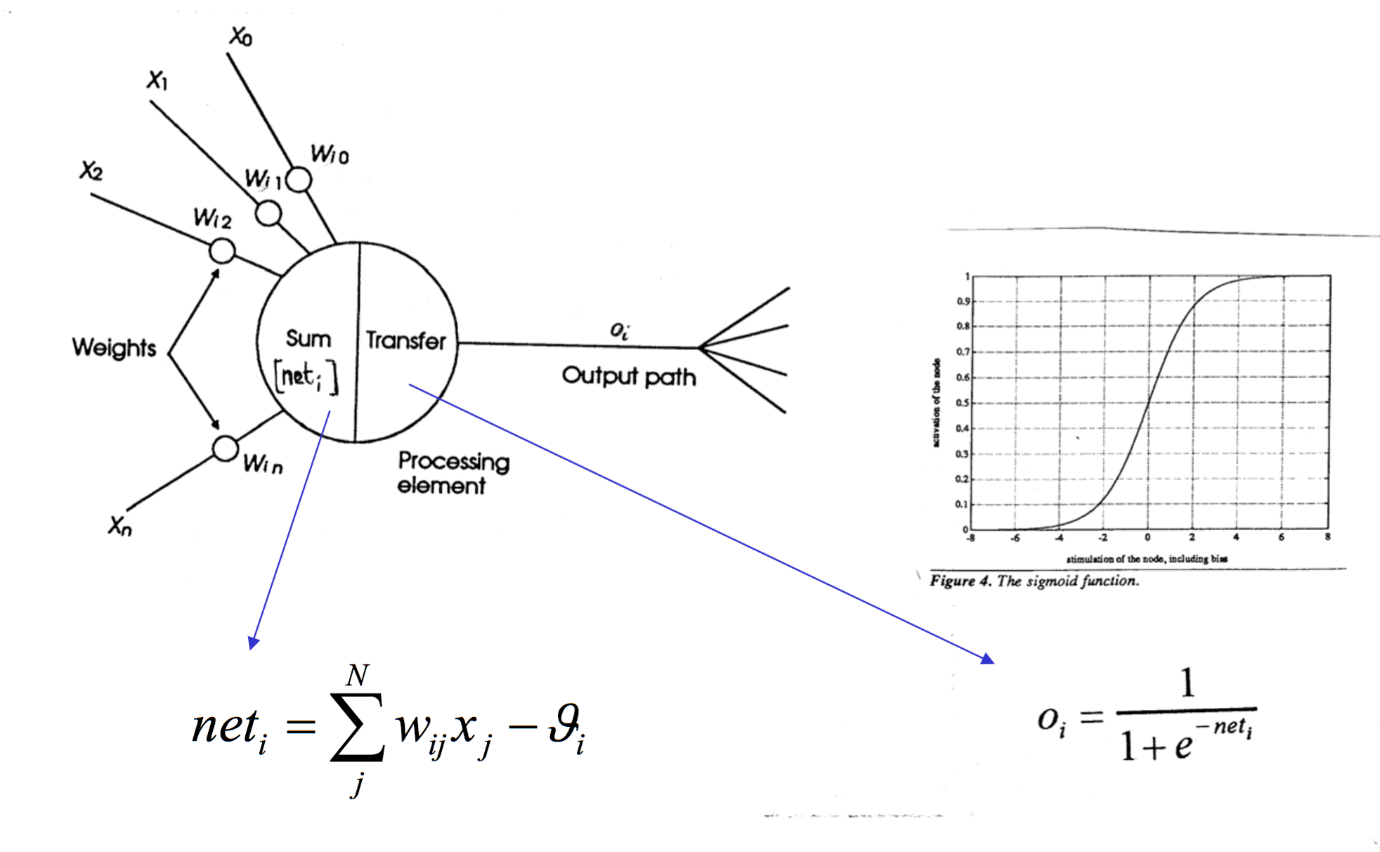

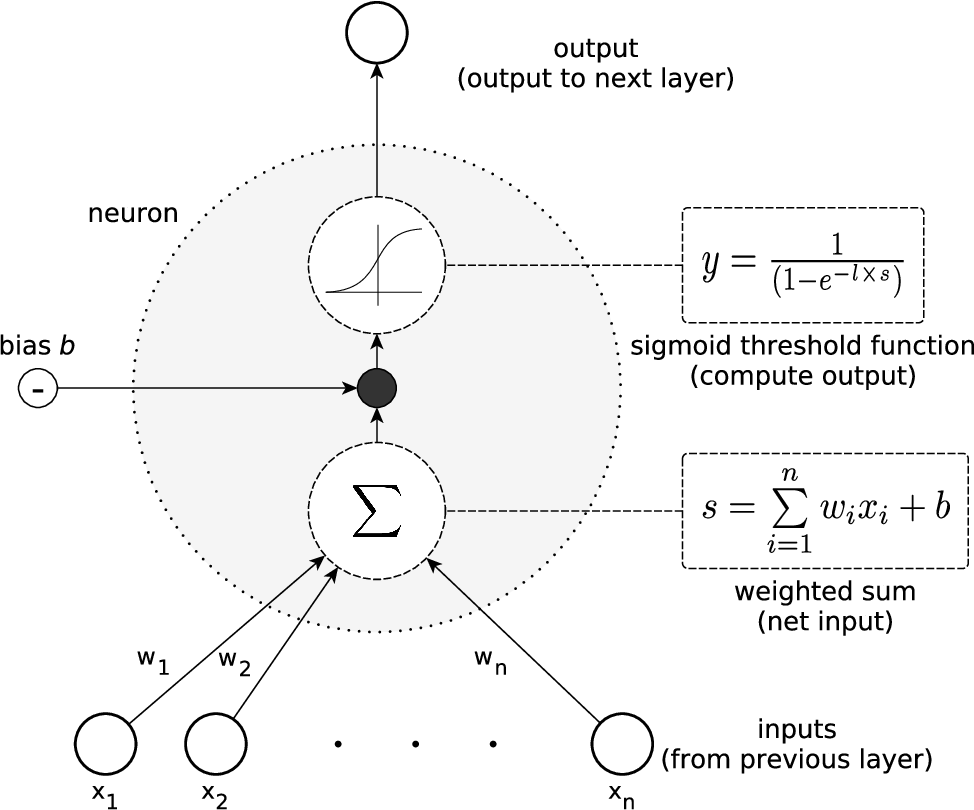

In a neural network, the functionality of a neuron is modeled mathematically. Each neuron receives inputs from other neurons, multiplies each input by a weight (a scalar value that represents the strength of the connection), and sums the weighted inputs, usually together with a bias term. This sum is then passed through an activation function, whose result is the neuron's output. The activation function introduces non-linearity into the network; without it, any stack of layers would collapse into a single linear transformation.
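A minimal sketch of this mathematical neuron, assuming NumPy and a sigmoid activation (any non-linear function would work). The input values, weights, and bias are made up for illustration. The second half shows why the non-linearity matters: two linear layers with no activation in between (biases omitted for brevity) compute exactly what a single linear layer computes.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def neuron(x, w, b, activation):
    # weighted sum of the inputs plus a bias, passed through the activation function
    return activation(np.dot(w, x) + b)

x = np.array([1.0, 2.0])
w = np.array([0.5, -0.25])
out = neuron(x, w, b=0.1, activation=sigmoid)   # weighted sum is 0.1, so out = sigmoid(0.1)

# Without an activation, stacking layers adds no expressive power:
rng = np.random.default_rng(0)
W1 = rng.normal(size=(3, 2))   # first linear layer: 2 inputs -> 3 units
W2 = rng.normal(size=(1, 3))   # second linear layer: 3 units -> 1 output
two_layers = W2 @ (W1 @ x)
one_layer = (W2 @ W1) @ x      # a single 1x2 matrix gives the same result
```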

The study of the brain and how it works is a complex and active field with many interesting and important areas of research. Here are a few hot topics in the field:

- Neuroplasticity: the ability of the brain to change and adapt in response to experience or injury.

- Neurogenesis: the process of generating new neurons in the brain, which was once thought to be limited to early development but is now thought to continue in some regions of the adult brain.

- Synaptogenesis: the formation and remodeling of synapses, the connections between neurons.

- Neuroprosthetics: the development of devices to replace or enhance the function of the nervous system.

Perceptron - The Building Blocks of Artificial Neural Networks | What is a perceptron?

A perceptron is a type of single-layer neural network that was first proposed in the 1950s by Frank Rosenblatt. It is considered the simplest type of artificial neural network and is used for supervised learning tasks, such as binary classification.

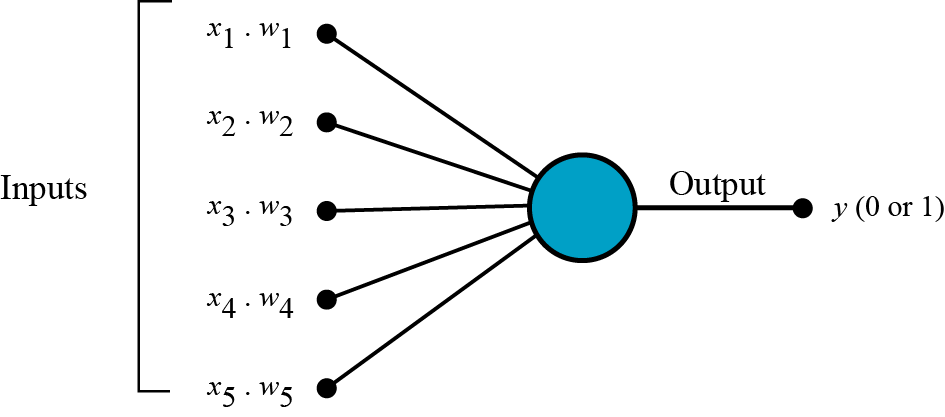

The basic structure of a perceptron consists of a layer of inputs connected directly to a single layer of output neurons. The input layer receives the input data, and each input is connected to an output neuron via a weight, which represents the strength of the connection between them. The output of the perceptron is determined by a simple mathematical function, called the activation function, applied to the weighted sum of the inputs. The classic perceptron uses the step function; replacing it with the sigmoid function gives the closely related sigmoid neuron.

The step function returns a binary output, either 1 or 0, depending on whether the input is greater than a threshold value. The sigmoid function, on the other hand, returns a continuous output between 0 and 1. The output of the sigmoid function can be interpreted as a probability of the input belonging to a certain class.
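A small sketch contrasting the two activation functions. The threshold of 0 and the sample inputs are arbitrary choices for illustration.

```python
import math

def step(z, threshold=0.0):
    # hard 0/1 decision: fires only when z exceeds the threshold
    return 1 if z > threshold else 0

def sigmoid(z):
    # smooth squashing into (0, 1); often read as P(class = 1)
    return 1.0 / (1.0 + math.exp(-z))

for z in [-2.0, -0.1, 0.0, 0.1, 2.0]:
    print(f"z={z:+.1f}  step={step(z)}  sigmoid={sigmoid(z):.3f}")
```

Around z = 0 the step output jumps abruptly from 0 to 1, while the sigmoid output changes smoothly, which is what makes gradient-based training possible.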

Perceptrons are trained using the perceptron learning rule, which adjusts the weights of the connections in order to reduce the errors in the network's predictions. Whenever an example is misclassified, the weights are nudged in the direction that would have made the output correct: w ← w + η (t − y) x, where η is the learning rate, t is the target, y is the perceptron's output, and x is the input. This update is closely related to stochastic gradient descent.

Perceptrons are particularly useful for linear classification problems, where the data is linearly separable, meaning it can be separated into two classes by a single linear boundary; in that case the learning rule is guaranteed to find a separating boundary after a finite number of updates. However, perceptrons cannot handle problems that are not linearly separable, such as XOR, which is why multi-layer perceptrons and other more complex network architectures were developed.
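The learning rule can be sketched in a few lines of NumPy. This is a minimal illustration under assumed settings: a step activation that fires only when the weighted sum is strictly positive, weights and bias initialized to zero, a learning rate of 1, and at most 100 passes over the data. It trains once on AND, which is linearly separable, and once on XOR, which is not.

```python
import numpy as np

def train_perceptron(X, targets, lr=1.0, epochs=100):
    """Perceptron learning rule with a step activation; returns weights, bias, last-epoch errors."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, t in zip(X, targets):
            y = 1 if x @ w + b > 0 else 0   # step activation
            if y != t:
                # move the decision boundary toward the misclassified example
                w += lr * (t - y) * x
                b += lr * (t - y)
                errors += 1
        if errors == 0:                     # every example classified correctly
            break
    return w, b, errors

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
_, _, and_errors = train_perceptron(X, [0, 0, 0, 1])   # linearly separable
_, _, xor_errors = train_perceptron(X, [0, 1, 1, 0])   # not linearly separable
```

`and_errors` ends at 0, while `xor_errors` stays above 0 however many epochs are allowed, because no single straight line separates the two XOR classes.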

There are several different types of neurons that are used in AI and machine learning, each with its own specific characteristics and use cases. Here are a few examples:

Perceptron: As described earlier, a perceptron is a single-layer neural network that is used for supervised learning tasks, such as binary classification. It has a simple structure: it computes a linear weighted sum of its inputs and passes it through a step (threshold) activation function.

Sigmoid Neuron: The sigmoid neuron is similar to the perceptron but uses a different type of activation function, the sigmoid function. This activation function maps the input to a value between 0 and 1, which can be interpreted as a probability. Sigmoid neurons are commonly used in feedforward networks, such as multi-layer perceptrons.

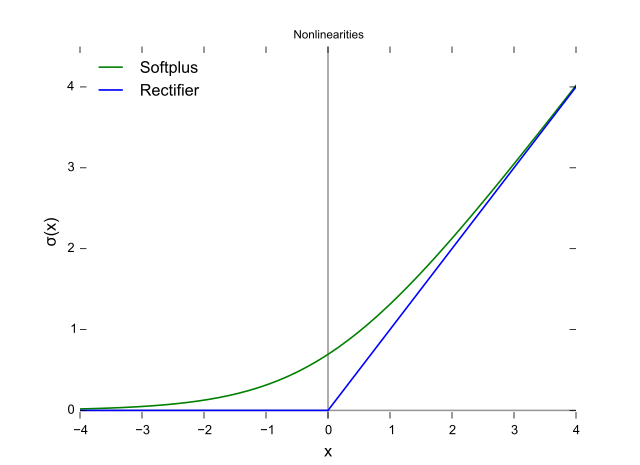

ReLU (Rectified Linear Unit) Neuron: ReLU neurons use a piecewise-linear activation function that outputs the input if it is positive and 0 otherwise. ReLU neurons are widely used in deep learning architectures because they are cheap to compute and, unlike sigmoid neurons, do not saturate for positive inputs, which helps gradients flow through many layers and typically speeds up training.
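To see why saturation matters, this sketch compares the derivatives of the sigmoid and ReLU functions (taking the ReLU derivative at exactly 0 to be 0, a common convention). Backpropagation multiplies such derivatives layer by layer, so small values shrink the gradient quickly.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)            # peaks at 0.25 when z = 0, near 0 for large |z|

def relu(z):
    return max(0.0, z)              # z for positive inputs, 0 otherwise

def relu_grad(z):
    return 1.0 if z > 0 else 0.0    # gradient at z = 0 taken as 0 by convention

for z in [-5.0, 0.0, 2.0, 10.0]:
    print(f"z={z:+5.1f}  sigmoid'={sigmoid_grad(z):.5f}  relu'={relu_grad(z):.0f}")
```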

LSTM (Long Short-term Memory) Neuron: LSTM units are a type of recurrent neural network (RNN) unit designed to handle sequential data, such as time series or natural language. Each LSTM unit has a memory cell, controlled by forget, input, and output gates, that can retain information over long periods of time, which makes LSTMs well-suited for tasks such as language translation or speech recognition.
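A single LSTM time step, written out with NumPy. This follows the common textbook formulation with forget, input, and output gates; the stacked weight layout, the sizes, the random weights, and the toy input sequence are assumptions made for illustration, not a trained model.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One time step of an LSTM cell.

    W has shape (4 * hidden, hidden + input) and stacks the weights of the
    forget, input, candidate and output transforms; b has shape (4 * hidden,).
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x]) + b
    f = sigmoid(z[0 * hidden:1 * hidden])   # forget gate: how much old memory to keep
    i = sigmoid(z[1 * hidden:2 * hidden])   # input gate: how much new information to write
    g = np.tanh(z[2 * hidden:3 * hidden])   # candidate values to write
    o = sigmoid(z[3 * hidden:4 * hidden])   # output gate: how much memory to expose
    c = f * c_prev + i * g                  # updated memory cell
    h = o * np.tanh(c)                      # new hidden state (the unit's output)
    return h, c

rng = np.random.default_rng(42)
input_size, hidden_size = 3, 4
W = rng.normal(scale=0.5, size=(4 * hidden_size, hidden_size + input_size))
b = np.zeros(4 * hidden_size)

h = np.zeros(hidden_size)
c = np.zeros(hidden_size)
sequence = rng.normal(size=(5, input_size))   # 5 time steps of toy input
for x in sequence:
    h, c = lstm_step(x, h, c, W, b)
```

The memory cell `c` is updated additively (`f * c_prev + i * g`), so when the forget gate stays close to 1 information can survive many steps, which is the property the paragraph above describes.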

Convolutional Neuron: Convolutional neurons are used in convolutional neural networks (CNNs), a type of deep neural network that is particularly well-suited to image recognition tasks. Each convolutional neuron looks at only a small local patch of its input and shares its weights (the filter) with every other neuron in the same feature map, so a convolutional layer effectively slides one small filter across the whole image to extract features. Convolutional layers are usually followed by pooling layers, which downsample the feature maps to reduce the computational cost.
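The convolution and pooling steps can be sketched directly in NumPy. Two assumptions to note: like most deep-learning libraries, `conv2d` here computes a cross-correlation (the kernel is not flipped), and `max_pool` uses non-overlapping 2×2 windows. The 5×5 image and the vertical-edge kernel are made-up examples.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation (what deep-learning libraries call convolution)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            # every output position reuses the same small kernel (shared weights)
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; leftover rows/columns that do not fill a window are dropped."""
    h = feature_map.shape[0] // size
    w = feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)   # dark left, bright right
vertical_edge = np.array([[-1.0, 1.0],
                          [-1.0, 1.0]])
features = conv2d(image, vertical_edge)   # 4x4 feature map
pooled = max_pool(features)               # 2x2 summary of the feature map
```

Every row of `features` is `[0, 2, 0, 0]`: the filter responds only where the dark-to-bright edge is. Pooling keeps that response while halving each dimension, giving `[[2, 0], [2, 0]]`.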

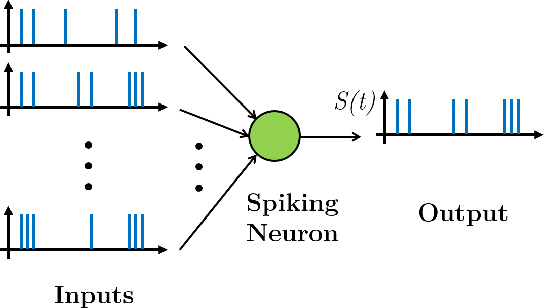

Spiking Neuron: Spiking neurons are artificial neurons modeled more closely on biological neurons: instead of a single number, they emit discrete spikes over time, individually, in bursts, or as spike trains. Because they respond to when inputs arrive and not just to their size, networks of spiking neurons are sensitive to the timing of the inputs, which can be useful for certain types of tasks, such as speech recognition and image processing.
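As an illustration of this timing sensitivity, here is a sketch of a leaky integrate-and-fire neuron, one of the simplest spiking models (a textbook model swapped in for illustration, not one named above). The parameter values are arbitrary assumptions: time steps of 1 ms, a 10 ms leak time constant, a threshold of 1, each input treated as an instantaneous voltage kick, and a 5-step refractory period after each spike during which input is ignored.

```python
def simulate_lif(inputs, tau=10.0, dt=1.0, v_rest=0.0, v_reset=0.0,
                 v_threshold=1.0, refractory_steps=5):
    """Leaky integrate-and-fire neuron; returns the time steps at which it spikes."""
    v = v_rest
    refractory_left = 0
    spikes = []
    for t, kick in enumerate(inputs):
        if refractory_left > 0:
            # refractory period: input is ignored and the potential is held at reset
            refractory_left -= 1
            v = v_reset
            continue
        # the potential leaks back toward rest (its "memory" fades) and adds the new input
        v += dt * (-(v - v_rest) / tau) + kick
        if v >= v_threshold:
            spikes.append(t)
            v = v_reset
            refractory_left = refractory_steps
    return spikes

close = [0.0] * 40
close[0] = close[2] = 0.6      # two kicks 2 steps apart
far = [0.0] * 40
far[0] = far[30] = 0.6         # the same two kicks 30 steps apart

print(simulate_lif(close))            # [2]
print(simulate_lif(far))              # []
print(simulate_lif([0.5] * 30))       # constant drive gives regularly spaced spikes
```

The same two inputs cause a spike when they arrive close together but not when they are far apart, because the membrane potential leaks away in between. Under constant drive, the gap between spikes is set partly by the refractory period.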

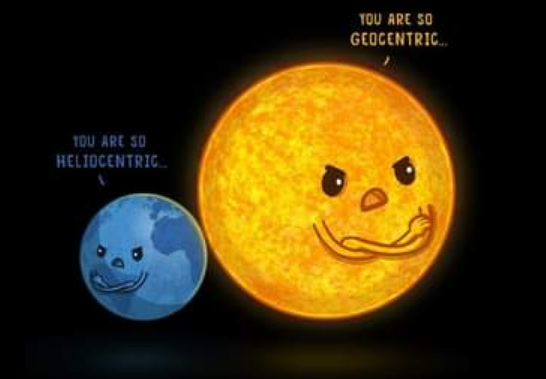

Heliocentrism vs Geocentrism. Think simple! [Lex Fridman Lecture]

Heliocentrism vs Geocentrism, a classic cosmic debate like "Team Peanut Butter or Team Jelly." For centuries, humans believed that the Earth was the center of the universe, like thinking the mirror in the bathroom is the center of the house. But then along came this Polish dude, Copernicus, who suggested that the Sun was the center and all the planets revolve around it, much like how the office coffee pot revolves around the caffeine needs of employees.

Galileo and Kepler also jumped on board with this idea and, like true detectives, observed and gathered evidence to support the new theory. But despite all the evidence, some people still cling to the idea that the Earth is the center, much like how some people cling to their flip phones.

It's important to remember that the universe is like a big jigsaw puzzle, and the simplest solution is usually the correct one. Heliocentrism made more sense and fit all the pieces together. And thanks to this simple solution, we have advancements like the laws of motion and gravity, which are pretty handy for things like not falling off the planet.

In short, heliocentrism is the "sun" of the show, and geocentrism is just a passing "moon."

So Perceptron ≠ Sigmoid ≠ LSTM ≠ ... ≠ Bio Neuron! But that is okay for today!

This difference is the domain of my Phase I study on solving AI problems.

The output of a neuron

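Biological neurons have bursty output: they produce brief, large, spike-like voltage pulses known as action potentials, sometimes fired in rapid bursts. Neurons also possess a form of memory, since their membrane state depends on recent inputs, as well as a cool-down period after each spike, called the refractory period, during which they cannot fire again or fire only with difficulty.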

Synchronization

Perceptrons are synchronous: every neuron in the network computes its output in lockstep, from the inputs at one specific point in time. Biological neurons, in contrast, are asynchronous: each one fires whenever its own membrane potential crosses its threshold, so outputs are produced at different times, much like processes running in different threads!

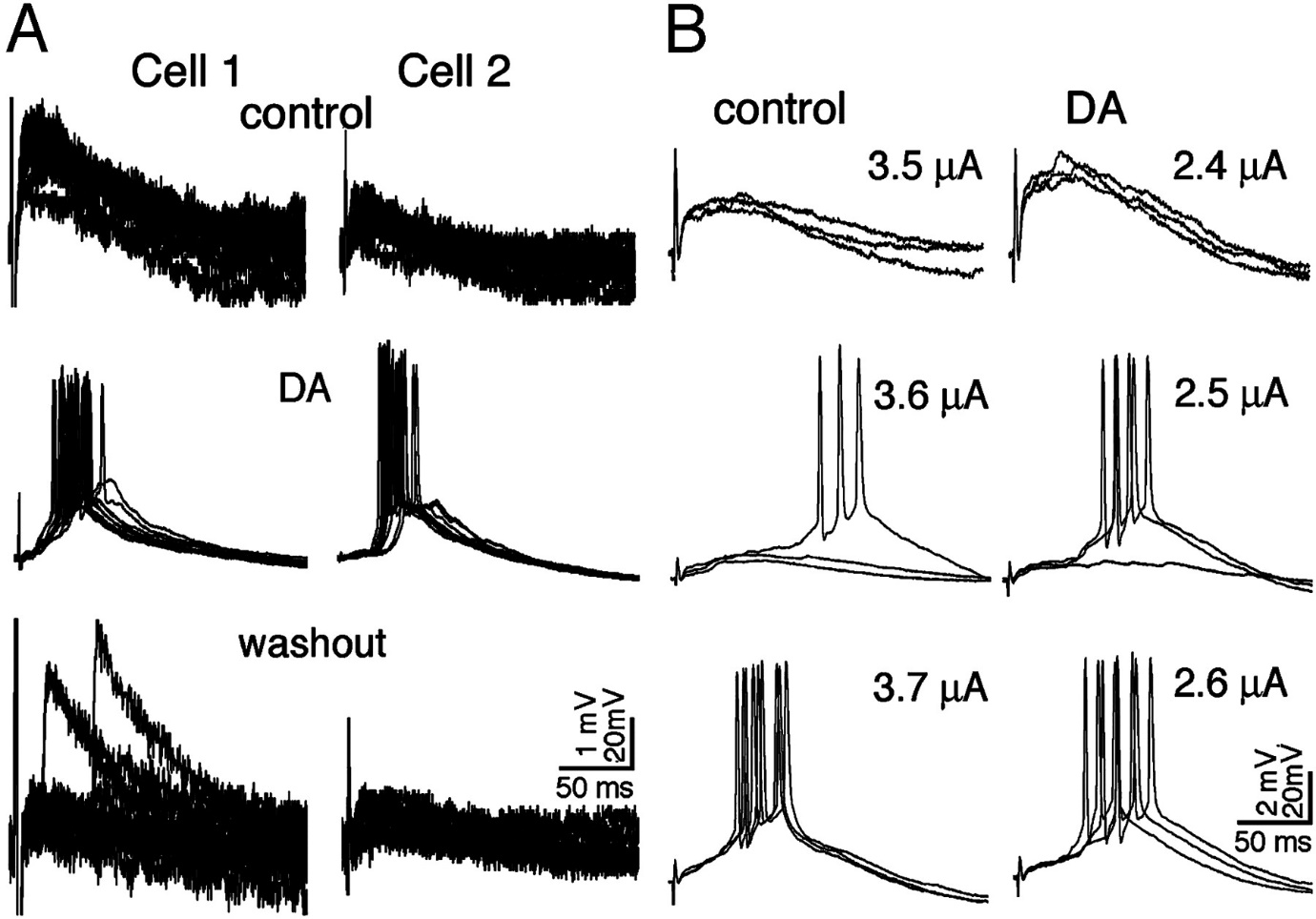

Bifurcation

Bifurcation is a term used in mathematics and physics to describe the behavior of a system when small changes in its parameters lead to large changes in its behavior. In the context of neurons, bifurcation refers to the changes in the behavior of a neuron as its parameters, such as the strength of its inputs or the properties of its membrane, are varied.

Bifurcation theory is the branch of mathematics that studies the qualitative changes in the behavior of a system as its parameters are varied. It is a powerful tool for understanding the behavior of non-linear systems, such as neurons, and has been used to study a wide range of phenomena in physics, chemistry, biology, and engineering.

In the context of neural networks, bifurcation theory has been used to study the behavior of neurons as the strength of their connections or the properties of their membranes are varied. Researchers have found that many types of neurons exhibit bifurcations, such as sudden changes in the frequency or amplitude of their firing, as their parameters are varied.
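A classic textbook example of a bifurcation in a neuron model is the quadratic integrate-and-fire model, dv/dt = I + v², which is often used as the normal form of a saddle-node bifurcation (this model is introduced here for illustration and is not from the text above). The input current I plays the role of the parameter: for I < 0 the model has a stable resting state and an unstable threshold, at I = 0 the two merge, and for I > 0 no equilibrium remains, so v runs off to infinity (a spike) over and over with period π/√I.

```python
import math

def qif_fixed_points(I):
    """Equilibria of the quadratic integrate-and-fire model dv/dt = I + v**2."""
    if I < 0:
        r = math.sqrt(-I)
        # -r is stable (resting potential), +r is unstable (firing threshold)
        return [-r, r]
    if I == 0:
        return [0.0]  # the two equilibria merge: the bifurcation point
    return []         # no equilibrium left: the neuron fires repetitively

def qif_period(I):
    """Time for v to run from -inf to +inf (one spike cycle) when I > 0."""
    if I <= 0:
        return math.inf  # no repetitive firing at or below the bifurcation
    return math.pi / math.sqrt(I)

for I in [-1.0, -0.01, 0.0, 0.01, 1.0]:
    print(f"I={I:+.2f}  equilibria={qif_fixed_points(I)}  period={qif_period(I):.2f}")
```

A tiny change in I around 0 switches the model from silent to firing, and the firing rate √I/π rises continuously from zero as I grows, a small parameter change producing a qualitative change in behavior.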

One of the key mathematicians in the field of bifurcation theory is René Thom. He is known for developing catastrophe theory, a branch of bifurcation theory that studies how systems behave as they undergo sudden changes, or "catastrophes." Thom's work has been applied to a wide range of fields, including neuroscience. In short, bifurcation theory is a powerful mathematical tool for studying non-linear systems like neurons, and its results are of great importance in neuroscience because they help explain how individual neurons fire, how networks of neurons work, and how neural networks behave overall.

The structure of a basic neural network

The structure of a basic neural network is a key aspect of its function and performance. Neural networks are composed of layers of interconnected "neurons," which process and transmit information. The structure of a neural network determines how information flows through the network and how the network learns from data.

There are several different types of neural network structures that have been proposed and used over the years, each with its own strengths and weaknesses. Here are a few examples of neural network structures and their pros and cons:

1. Single-layer Perceptron: The simplest type of neural network, a single-layer perceptron connects a layer of inputs directly to a single layer of output neurons. Pros: simple and easy to implement. Cons: limited to linearly separable problems; cannot handle non-linear problems such as XOR. (Proposed in the 1950s)

2. Multi-layer Perceptron (MLP): A more complex neural network than the single-layer perceptron, the multi-layer perceptron consists of multiple layers of neurons and can be used to handle non-linear problems. Pros: more powerful than single-layer perceptrons and able to handle non-linear problems. Cons: requires more computational resources and is more difficult to train. (Proposed in the 1960s; popularized in the 1980s once backpropagation made training practical)

3. Convolutional Neural Networks (CNNs): A type of deep neural network that is particularly well-suited to image recognition tasks. It uses convolutional layers, which slide small filters across the image to extract features, and pooling layers, which downsample the feature maps to reduce the computational cost. Pros: effective for image and video processing tasks; efficient use of parameters thanks to weight sharing. Cons: requires substantial computational resources; can overfit when training data is limited. (Proposed in the 1980s)

4. Recurrent Neural Networks (RNNs): A type of neural network that processes sequences of data, such as speech or text, by carrying a hidden state (a memory of past inputs) from one step to the next. Pros: naturally suited to sequential data such as speech or text. Cons: difficult to train, because vanishing and exploding gradients make long-term dependencies hard to learn; computationally expensive, because the steps must be processed one after another. (Proposed in the 1980s)

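5. Long Short-term Memory (LSTM) Networks: A variation of the RNN that introduces gated memory cells to improve the ability to store and retrieve information over longer periods of time. Pros: better than plain RNNs at learning long-term dependencies in sequential data. Cons: more parameters and computation per time step than a plain RNN. (Proposed in 1997)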
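To illustrate why an extra layer helps, here is a minimal sketch of a two-layer perceptron that computes XOR, the classic example a single-layer perceptron cannot solve. The weights are hand-picked for illustration rather than learned: one hidden unit computes OR, the other computes AND, and the output fires when OR is true but AND is not.

```python
import numpy as np

def step(z):
    # elementwise step activation: 1 where z > 0, else 0
    return (z > 0).astype(int)

# Hand-picked weights (illustrative, not learned)
W_hidden = np.array([[1.0, 1.0],     # hidden unit 1: x1 OR x2
                     [1.0, 1.0]])    # hidden unit 2: x1 AND x2
b_hidden = np.array([-0.5, -1.5])
w_out = np.array([1.0, -1.0])        # OR minus AND
b_out = -0.5

def mlp(x):
    h = step(W_hidden @ x + b_hidden)           # hidden layer (OR, AND)
    return 1 if w_out @ h + b_out > 0 else 0    # output layer: OR and not AND

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
outputs = [mlp(x) for x in X]
```

The hidden layer re-maps the four inputs so that the output neuron only has to draw a single straight line, which is exactly what the single-layer perceptron could not do on the raw inputs.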
